The integration of AI into customer service operations has revolutionized how SaaS businesses approach support. AI-powered agents like chatbots and virtual assistants have enabled companies to scale their support functions, reduce operational costs, and provide consistent service around the clock.

However, with the widespread adoption of these technologies comes the need for a new approach to performance measurement. Traditional customer service metrics, designed for human interactions, fall short when applied to automated systems.

This article provides a comprehensive guide to the most important metrics for evaluating AI customer service agents.

From customer satisfaction to intent recognition accuracy and business impact, you’ll discover how to track, interpret, and improve each metric to ensure your AI investment is generating measurable results.

Understanding AI Customer Service Agents

AI customer service agents differ fundamentally from human agents. They are capable of handling thousands of customer interactions simultaneously, operate without fatigue, and continuously learn from new data.

These agents typically fall into three categories: chatbots, voice bots, and hybrid systems. Chatbots are commonly used for text-based support and can be deployed across websites, apps, and messaging platforms.

Voice bots handle spoken interactions and are frequently used in call centers or voice assistants. Hybrid AI agents combine the automation of AI with the escalation abilities of human agents, enabling seamless transitions for more complex queries.

The key advantage of AI agents lies in their scalability and efficiency, but measuring their success requires a different set of metrics. It’s not just about how many tickets they resolve; it’s about how well they understand customer intent, provide accurate responses, and ultimately contribute to a positive customer experience and business growth.

Core Pillars of Measuring AI Agent Success

To holistically assess the performance of AI customer service agents, it’s useful to group metrics into four core categories:

- Customer Experience Metrics: These evaluate how customers perceive their interactions with the AI.

- Operational Efficiency Metrics: These measure how well the AI improves support processes.

- AI Intelligence & Learning Metrics: These assess how accurately the AI understands and learns from interactions.

- Business Impact Metrics: These focus on the broader value AI provides, including cost savings and revenue impact.

Key Metrics to Track

A. Customer Experience Metrics

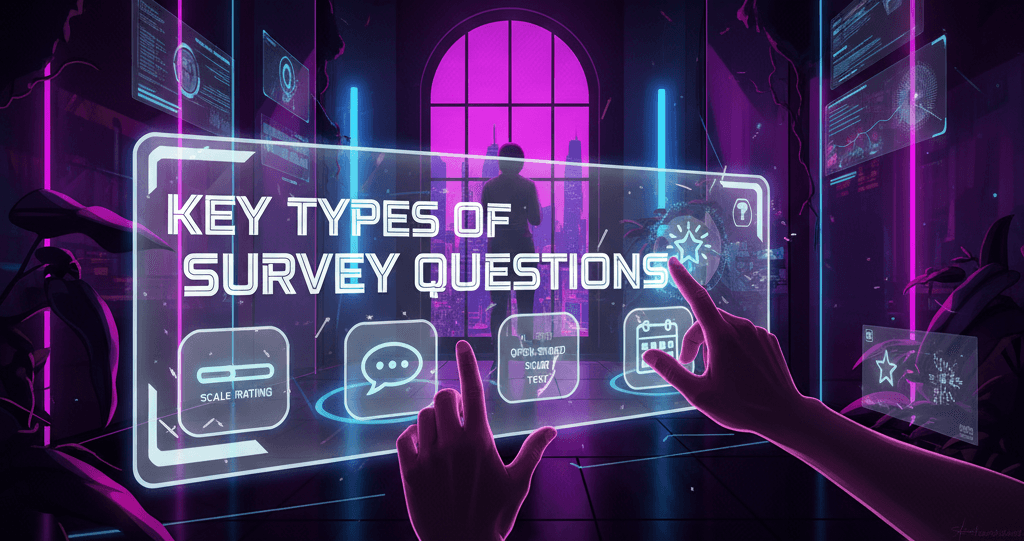

Customer Satisfaction Score (CSAT): Measures user satisfaction after an AI interaction, typically via a 1–5 rating and reported as the percentage of satisfied responses. High CSAT indicates helpful, relevant AI responses.

Net Promoter Score (NPS): Gauges how likely users are to recommend the brand after interacting with the AI, usually on a 0–10 scale. Reflects user trust and loyalty.

Resolution Rate: Tracks the percentage of issues fully resolved by AI without human help. High resolution means the bot handles queries effectively.

Customer Effort Score (CES): Indicates how easy the interaction was for users. Lower effort means streamlined, clear support.

Bot Abandonment Rate: Percentage of users who leave before getting a resolution. High abandonment can suggest slow responses or poor design.
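
The customer experience metrics above reduce to simple ratios once the survey answers and session counts are collected. The following is a minimal sketch, not a standard implementation: it assumes CSAT counts ratings of 4 or 5 as "satisfied" and uses the usual NPS convention of promoters (9–10) minus detractors (0–6). The function names and sample numbers are made up for illustration.

```python
from typing import Sequence


def csat_percent(ratings: Sequence[int], satisfied_min: int = 4) -> float:
    """Percentage of 1-5 ratings that are at or above `satisfied_min`."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    satisfied = sum(1 for r in ratings if r >= satisfied_min)
    return 100.0 * satisfied / len(ratings)


def nps(scores: Sequence[int]) -> float:
    """Percent promoters (9-10) minus percent detractors (0-6), 0-10 scale."""
    if not scores:
        raise ValueError("scores must not be empty")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


def abandonment_rate(abandoned_sessions: int, total_sessions: int) -> float:
    """Percentage of bot sessions the user left before reaching a resolution."""
    if total_sessions <= 0:
        raise ValueError("total_sessions must be positive")
    return 100.0 * abandoned_sessions / total_sessions


# Made-up sample data, for illustration only.
print(csat_percent([5, 4, 3, 2, 5]))   # 60.0
print(nps([10, 9, 9, 7, 3]))           # 40.0
print(abandonment_rate(15, 120))       # 12.5
```

If your survey tool uses a different scale or a different "satisfied" threshold, adjust `satisfied_min` accordingly so results stay comparable over time.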

B. Operational Efficiency Metrics

First Response Time (FRT): Time taken for the bot to reply. Ideally instantaneous, but real-world performance may vary.

Average Handle Time (AHT) for Escalations: Measures the time from AI handoff to issue resolution by a human. Lower AHT means the AI gathered helpful context.

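Containment Rate: The proportion of conversations that stay with the AI from start to finish, with no handoff to a human. A critical metric for assessing automation effectiveness, but read it alongside Resolution Rate, since a contained conversation is not necessarily a resolved one.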

Escalation Rate: Percentage of queries requiring human intervention. High rates may indicate intent recognition or knowledge base issues.

Concurrency Handling: Measures how many users the AI can help simultaneously. Key for understanding system scalability.
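
As a rough illustration of how containment, escalation, and escalation handle time relate, the sketch below derives all three from the same conversation log. The `Conversation` record and its field names are hypothetical; it also makes the simplifying assumption that any conversation not handed to a human counts as contained.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Conversation:
    escalated: bool                                      # handed off to a human
    handoff_to_resolution_min: Optional[float] = None    # set only if escalated


def operational_summary(conversations: Sequence[Conversation]) -> dict:
    """Containment rate, escalation rate (both %), and mean escalation AHT."""
    total = len(conversations)
    if total == 0:
        raise ValueError("conversations must not be empty")
    escalated = [c for c in conversations if c.escalated]
    times = [
        c.handoff_to_resolution_min
        for c in escalated
        if c.handoff_to_resolution_min is not None
    ]
    return {
        "containment_rate": 100.0 * (total - len(escalated)) / total,
        "escalation_rate": 100.0 * len(escalated) / total,
        # Mean minutes from AI handoff to human resolution; None if no data.
        "escalation_aht_min": sum(times) / len(times) if times else None,
    }


# Made-up log: 8 conversations, 2 of them escalated.
log = [Conversation(False)] * 6 + [Conversation(True, 12.0), Conversation(True, 8.0)]
print(operational_summary(log))
```

Under this definition the containment and escalation rates always sum to 100%, which is a useful sanity check on a dashboard.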

C. AI Intelligence & Learning Metrics

Intent Recognition Accuracy: Tracks how often the AI correctly interprets user questions. Critical for effective response generation.

Fallback Rate: Measures how frequently the bot fails to understand queries. High fallback means poor training or unexpected input.

Retraining Frequency: Indicates how often the bot is updated. Regular updates help the AI adapt and improve.

Knowledge Base Utilization: Evaluates how often the bot pulls from existing support content. Effective use reduces repetitive support work.
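
Intent recognition accuracy and fallback rate can both be estimated from a sample of conversations that a reviewer has labeled with the correct intent. The sketch below assumes each sample is a pair of (true intent, predicted intent), with `None` standing in for a fallback; that representation and the intent names are assumptions for illustration.

```python
from typing import Optional, Sequence, Tuple

# (true_intent, predicted_intent); predicted is None when the bot fell back.
Sample = Tuple[str, Optional[str]]


def intent_metrics(samples: Sequence[Sample]) -> dict:
    """Intent recognition accuracy and fallback rate, both as percentages."""
    total = len(samples)
    if total == 0:
        raise ValueError("samples must not be empty")
    correct = sum(1 for truth, predicted in samples if predicted == truth)
    fallbacks = sum(1 for _, predicted in samples if predicted is None)
    return {
        "intent_accuracy": 100.0 * correct / total,
        "fallback_rate": 100.0 * fallbacks / total,
    }


# Made-up labeled sample: 8 correct, 1 misclassified, 1 fallback.
samples = (
    [("reset_password", "reset_password")] * 8
    + [("cancel_plan", "upgrade_plan")]
    + [("export_data", None)]
)
print(intent_metrics(samples))  # {'intent_accuracy': 80.0, 'fallback_rate': 10.0}
```

Note that a fallback counts against accuracy here, since the bot did not identify the right intent; some teams report accuracy only over non-fallback turns, so state which convention you use.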

D. Business Impact Metrics

Cost Per Resolution (AI vs Human): Compares what it costs to resolve an issue through AI with what it costs through human-led support, showing the financial return on automation.

Deflection Rate: Reflects how many support requests are resolved without creating a new ticket. High deflection reduces agent workload.

Revenue Influence: Tracks how AI contributes to conversions, upsells, or renewals. Especially useful in SaaS environments.

Customer Retention & Churn Metrics: Indicate how AI affects long-term loyalty. Poor support leads to churn; effective AI aids retention.

SLA Compliance Rate (AI vs Human): Compares how well AI meets service level goals against human agents.
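
To make the cost comparison concrete, here is a small sketch of cost per resolution and deflection rate. The figures are invented, and what belongs in "total cost" (licenses, agent salaries, tooling) is a judgment call each team has to make for itself.

```python
def cost_per_resolution(total_cost: float, resolved_count: int) -> float:
    """Total support cost for a channel divided by the issues it resolved."""
    if resolved_count <= 0:
        raise ValueError("resolved_count must be positive")
    return total_cost / resolved_count


def deflection_rate(deflected: int, tickets_created: int) -> float:
    """Percentage of support requests resolved without creating a ticket."""
    total = deflected + tickets_created
    if total <= 0:
        raise ValueError("there must be at least one support request")
    return 100.0 * deflected / total


# Invented monthly figures, for illustration only.
ai = cost_per_resolution(total_cost=1500.0, resolved_count=3000)
human = cost_per_resolution(total_cost=24000.0, resolved_count=2000)
print(f"AI: {ai:.2f}, human: {human:.2f}, saving per resolution: {human - ai:.2f}")
print(f"Deflection rate: {deflection_rate(600, 400):.1f}%")
```

Comparing the two per-resolution figures side by side is usually more informative than either number alone, especially when AI and human agents handle issues of different complexity.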

How to Benchmark & Set Goals for Each Metric

Benchmarks provide context. As rough guides, a CSAT above 80% is strong, a containment rate of 60–80% indicates an effective bot, and intent recognition accuracy should exceed 90% in mature systems.

Set goals based on your product complexity, support volume, and user expectations. Start with industry averages, then fine-tune based on your historical data.
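
One simple way to put goals into practice is to keep the targets in a single place and flag any metric that falls short. The sketch below uses the rough benchmarks mentioned above as starting minimums; the metric keys and observed values are hypothetical, and the thresholds should be replaced with your own once you have historical data.

```python
from typing import Dict, List

# Starting minimums (percent), taken from the rough benchmarks in this article.
TARGET_MINIMUMS: Dict[str, float] = {
    "csat": 80.0,
    "containment_rate": 60.0,
    "intent_accuracy": 90.0,
}


def below_target(observed: Dict[str, float],
                 targets: Dict[str, float] = TARGET_MINIMUMS) -> List[str]:
    """Names of metrics that are missing or under their minimum target."""
    return [
        name
        for name, minimum in targets.items()
        if observed.get(name, float("-inf")) < minimum
    ]


# Hypothetical observed values for one reporting period.
observed = {"csat": 84.0, "containment_rate": 55.0, "intent_accuracy": 91.5}
print(below_target(observed))  # ['containment_rate']
```

A missing metric is treated as below target on purpose, so gaps in tracking surface in the same report as underperformance.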

Tools and Dashboards to Monitor These Metrics

Use built-in analytics in tools like Zendesk, Intercom, and Freshdesk to monitor metrics like CSAT, resolution rates, and containment. Platforms such as GA4, Power BI, Looker, and Tableau allow advanced visualization.

For AI-specific analysis, tools like Chatbase and Observe.AI track fallback rates, intent matching, and NLP performance.

How to Improve AI Agent Performance Using These Metrics

Improving performance begins with analyzing the metrics. High fallback rates point to training issues: update training data, refine intents, and test new flows. For high escalation rates, enhance knowledge base integration.

Continually test and retrain the AI based on real interactions and feedback to close gaps and improve over time.
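
When deciding what to retrain first, it can help to break the fallback rate down by topic. The sketch below assumes each logged message has been tagged with a topic (for example by a reviewer) and marked as a fallback or not; the topic names and log format are hypothetical.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple


def fallback_by_topic(log: Iterable[Tuple[str, bool]]) -> List[Tuple[str, float]]:
    """Fallback rate (%) per topic, sorted from worst to best."""
    totals = defaultdict(int)
    misses = defaultdict(int)
    for topic, fell_back in log:
        totals[topic] += 1
        if fell_back:
            misses[topic] += 1
    rates = [(topic, 100.0 * misses[topic] / totals[topic]) for topic in totals]
    # Highest fallback rate first: these topics are retraining candidates.
    return sorted(rates, key=lambda item: item[1], reverse=True)


# Made-up log entries: (topic, did the bot fall back?)
log = [
    ("billing", True), ("billing", False),
    ("login", False), ("login", False),
    ("export", True),
]
for topic, rate in fallback_by_topic(log):
    print(f"{topic}: {rate:.0f}% fallback")
```

Topics with very few messages can show extreme rates, so it is worth checking volume before acting on the ranking.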

Real-World Examples or Case Studies

A SaaS company reduced escalations by 35% through weekly AI retraining and flow optimization. An HR tech firm improved CSAT from 72% to 90% by simplifying bot prompts and updating content based on customer effort insights.

These examples show how metric-based improvements drive real business outcomes.

Common Pitfalls When Measuring AI Agent Success

Don’t over-prioritize containment at the expense of customer satisfaction. High automation is meaningless if users are frustrated. Make sure your training data is high quality too, since the AI can only be as accurate as the examples it learns from.

Don’t ignore escalation quality, and establish a regular loop of measurement, improvement, and retraining. AI success depends on iteration, not a set-it-and-forget-it implementation.