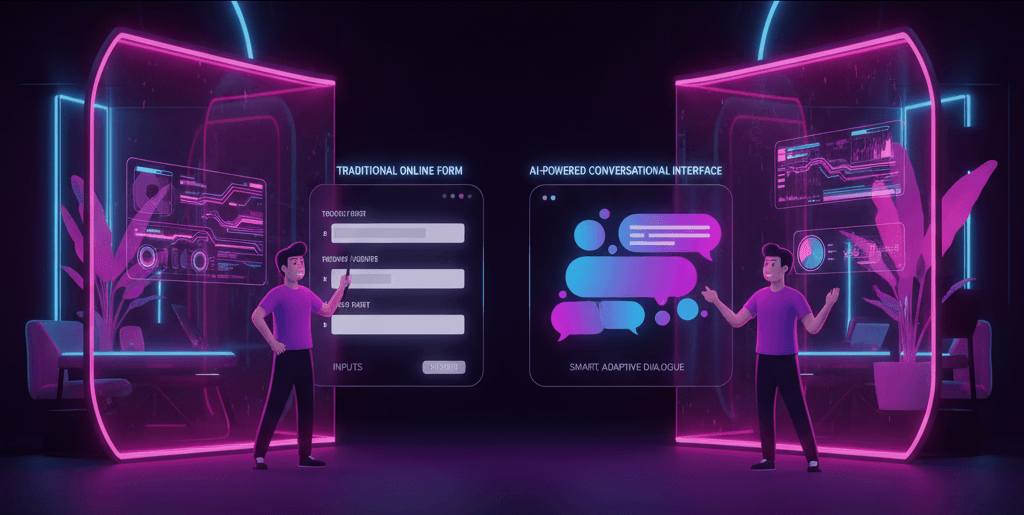

AI-powered forms, whether chatbots guiding onboarding, interactive surveys diagnosing user needs, or parameter-suggesting questionnaires, are now central to SaaS workflows.

When implemented thoughtfully, they delight users with efficiency and personalization. But transparency is critical. When the AI operates as a mysterious “black box,” users feel uneasy, question data usage, and often abandon forms.

Poorly communicated AI can erode brand trust faster than any explicit malfunction.

This guide is crafted for SaaS product managers, UX designers, and customer support leaders who want to elevate brand trust through intelligent transparency.

Here, you will find the core principles of transparent AI form interactions, enriched UX patterns, copywriting examples, and a strategic implementation roadmap.

We also explore trust metrics, regulatory compliance, and future trends: everything you need to transform your forms from potentially confusing to genuinely trustworthy.

Understanding the Search Intent

Users searching for “Building Brand Trust Through Transparent AI Form Interactions” aren’t looking for philosophical essays; they need actionable frameworks.

They want UI patterns, persuasive microcopy, and measurable evidence that transparency enhances brand credibility and business outcomes.

This article addresses those needs directly: it provides clear frameworks, plug-and-play components, illustrative case studies, and compliance-ready guidelines tailored to AI-powered forms in SaaS contexts.

Unlike generic coverage, this guide speaks directly to product and support leaders seeking to marry practical UX execution with trust-building.

The Trust Challenge with AI Forms

AI forms can feel inherently opaque. Users might wonder, “Why is the system asking that?” or “What does this AI truly know about me?”

This form of “black box syndrome” often results in cautious behavior and early exit. Research shows that users may abandon complex AI systems after a single misstep, even when accuracy is objectively high.

Furthermore, algorithm aversion, the preference for human judgment over machine suggestions, grows when stakes are perceived as high, such as support or financial decisions.

Ultimately, a lack of transparency triggers discomfort and cognitive friction. When users feel they’re handing over control to an unknown mechanism, their trust evaporates.

But by illuminating parts of the process (explaining why a question is asked or a recommendation is made), you can significantly improve users’ comfort and reduce drop-off.

Transparency Principles for AI Forms

Disclosure & Consent: Begin every AI form journey with a clear disclosure, e.g., “This form is AI-powered by [Service], designed to speed things up.” Always tie consent to specific actions; include an optional toggle like “Enable AI recommendations for a personalized experience.” Such clarity aligns with the GDPR’s requirement that consent be freely given, specific, informed, and unambiguous.
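As a minimal sketch of tying consent to a specific action, the snippet below models a per-purpose toggle that is off by default and can be withdrawn as easily as it is granted. The names `ConsentRecord`, `set_consent`, and the `ai_recommendations` purpose are hypothetical and not taken from any particular form library.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, Optional


@dataclass
class ConsentRecord:
    """Per-purpose consent state for one form session (hypothetical model)."""

    # Maps a purpose to the UTC time consent was granted; absent means not granted.
    granted_at: Dict[str, datetime] = field(default_factory=dict)

    def set_consent(self, purpose: str, enabled: bool, now: Optional[datetime] = None) -> None:
        """Grant or withdraw consent for a single, named purpose."""
        if enabled:
            self.granted_at[purpose] = now or datetime.now(timezone.utc)
        else:
            # Withdrawing simply removes the grant for that purpose.
            self.granted_at.pop(purpose, None)

    def allows(self, purpose: str) -> bool:
        """Return True only if the user has explicitly enabled this purpose."""
        return purpose in self.granted_at


consent = ConsentRecord()
print(consent.allows("ai_recommendations"))  # nothing is enabled until the user opts in
consent.set_consent("ai_recommendations", True)
print(consent.allows("ai_recommendations"))
```

Keeping one entry per purpose, rather than a single global flag, is what lets the toggle copy stay specific about what the user is agreeing to.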

Explainability: Explaining AI rationale doesn’t mean revealing code; it hinges on clarity. Use inline tooltips (“We asked this question to match your use case”), confidence scores (“AI is 78% certain”), or feature-based attributions (“Suggested because of your user growth”). Explainable AI methods like LIME and SHAP provide behind-the-scenes rationale that supports these UI cues.
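To illustrate how a confidence score and a feature-based attribution might become one line of tooltip copy, here is a small sketch. It assumes attribution scores have already been computed upstream (for example by an explainer such as SHAP or LIME) and only formats them; `build_explanation` and the feature labels are hypothetical.

```python
from typing import Dict


def build_explanation(confidence: float, attributions: Dict[str, float]) -> str:
    """Format a confidence value and attribution scores as short microcopy.

    `attributions` maps a user-facing feature label to a signed contribution
    score. The scores are assumed to come from an upstream explainer.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    text = f"AI is {round(confidence * 100)}% certain."
    if attributions:
        # Surface only the strongest driver so the tooltip stays short.
        top_feature = max(attributions, key=lambda name: abs(attributions[name]))
        text += f" Suggested because of your {top_feature}."
    return text


print(build_explanation(0.78, {"user growth": 0.42, "team size": 0.17}))
# prints: AI is 78% certain. Suggested because of your user growth.
```

Showing a single driver is a design choice in this sketch; a longer list could sit behind a "See more" link for users who want it.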

Human Escalation: “Talk to a human” is more than a button; it’s a signal that your brand values user choice. If the AI’s confidence dips below a threshold, prompting the user to switch to a human is both practical and trust-enhancing.
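A confidence threshold of this kind can be sketched in a few lines. The 0.60 default below is an assumption (it mirrors the 60% figure in the support case study later in this guide), and `FormResponse` and `respond` are hypothetical names.

```python
from dataclasses import dataclass

ESCALATION_THRESHOLD = 0.60  # assumed cut-off; tune it per product and use case


@dataclass
class FormResponse:
    message: str
    offer_human: bool


def respond(suggestion: str, confidence: float,
            threshold: float = ESCALATION_THRESHOLD) -> FormResponse:
    """Return the AI suggestion, or an offer to escalate when confidence is low."""
    if confidence < threshold:
        return FormResponse(
            "I'm not sure about this one. Would you like to talk to a human?",
            offer_human=True,
        )
    return FormResponse(suggestion, offer_human=False)


print(respond("Plan X", 0.82))
print(respond("Plan X", 0.41))
```

The "Talk to a human" option can still be shown at all times; the threshold only decides when the form raises it proactively.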

Control & Feedback: Transparency means more than seeing suggestions; it means giving users the power to act. Offer inline controls to correct inputs or disable AI suggestions. This feeds into a sense of autonomy and shared decision-making.

Security & Data Handling Cues: Minimize friction by keeping data collection purposeful and secure. Padlock icons, brief privacy statements (“Your inputs are encrypted and session-only”), and links to digestible privacy policies create visible signals of safety and responsibility.

UX Design Patterns in Action

Putting transparency into practice means weaving trust into the interface itself. Start by disclosing information progressively; don’t show all details at once.

If a user enters their team size, the AI may later suggest a plan, contextualized with a tooltip like, “Based on industry adoption trends for teams this size.” Visually, separate AI suggestions with subtle icons or bordered modules labeled “AI Suggestion”, ensuring users understand what’s machine-generated.

Design error states thoughtfully. If the AI misfires or signals uncertainty (“I’m unsure about this”), provide a fallback flow: “We can loop in a human here. Would you like that?” These patterns reflect the XAI trio of clarity, control, and context.

Consider a wireframe where users input details in step one, view an AI-generated recommendation in step two (annotated with Why/Confidence), and then have the option to correct or disable AI before final submission.

This structure provides detail without overwhelming users, and makes the AI appear as an intelligent assistant, not an opaque gatekeeper.

Measuring Trust & Form Success

Building transparency is just half the battle; measuring outcomes completes the loop. Track form completion rates, especially across A/B tests contrasting transparent AI vs opaque AI flows.
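One common way to check whether a difference in completion rates between two variants is more than noise is a two-proportion z-test. The sketch below uses only the Python standard library; the function name and the sample counts are made up for illustration and are not data from any study cited here.

```python
import math
from typing import Tuple


def two_proportion_z_test(completed_a: int, started_a: int,
                          completed_b: int, started_b: int) -> Tuple[float, float]:
    """Compare completion rates of variant A (opaque) and variant B (transparent).

    Returns (z, two_sided_p_value) using the pooled-proportion standard error.
    """
    p_a = completed_a / started_a
    p_b = completed_b / started_b
    pooled = (completed_a + completed_b) / (started_a + started_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / started_a + 1 / started_b))
    if se == 0:
        raise ValueError("completion rates of exactly 0% or 100% cannot be tested this way")
    z = (p_b - p_a) / se
    # Two-sided p-value under the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 600 of 1000 users completed the opaque flow, 720 of 1000 the transparent one.
z, p = two_proportion_z_test(600, 1000, 720, 1000)
print(f"z = {z:.2f}, p = {p:.2g}")
```

With small samples the normal approximation is weak, so treat the result as a rough signal and keep the experiment running until each variant has a reasonable number of sessions.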

Survey users post-form using trust-oriented statements (“The AI suggestions were clear and helped me”) to gather qualitative insights.

PwC’s research, revealing that 85% of consumers prefer ethical AI, provides a compelling commercial rationale. Additionally, studies show that transparent AI flows can lift form completion by 10–20%.

In one internal pilot, adding disclosure and rationale tooltips raised completion from 60% to 72%, with only 8% of users opting out of the AI suggestion, while 18% chose human escalation.
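When reporting a result like this, it helps to separate the absolute change from the relative lift, since the two are easy to confuse. A short sketch using the pilot's own figures (the `lift` helper is a hypothetical name):

```python
from typing import Tuple


def lift(before: float, after: float) -> Tuple[float, float]:
    """Return (absolute change in percentage points, relative lift in percent)."""
    absolute_points = (after - before) * 100
    relative_percent = (after - before) / before * 100
    return absolute_points, relative_percent


points, relative = lift(0.60, 0.72)
print(f"{points:.0f} percentage points, {relative:.0f}% relative lift")
# prints: 12 percentage points, 20% relative lift
```

Moving from 60% to 72% is therefore a 12-point gain, which corresponds to a 20% relative lift.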

Trust is measurable, not abstract, and these metrics directly translate to better conversion, retention, and brand perception.

Case Studies

SaaS Onboarding: When a SaaS provider detected high drop-off during plan selection, they introduced an AI-assisted recommendation flow.

After entering business size and goals, an AI panel suggested a plan with contextual rationale (“Because your team size matches successful customers, Plan X works best”). Within a month, onboarding conversions rose by 18%, and 90% of users rated the suggestion “helpful.”

Support Chat Intake: A B2B company integrated an AI form to triage support requests. If AI confidence dipped below 60%, the form explicitly offered “I’m not sure, would you like to chat with a human?” This transparency reduced form abandonment by 25% and lowered resolved-ticket volume by 12%, improving both trust and efficiency.

Privacy-First Survey: A fintech firm launching a survey embedded a clear privacy statement: “We analyze only what you authorize; no data is saved beyond the session.” This increased completion by 15%, with survey trust scores rising by 0.6 points on a 5‑point scale. Users reported feeling reassured by upfront transparency.

Implementation Blueprint

Audit: Map every AI interaction in forms. Record data flows, triggers, and UI opportunities for transparency.
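The audit can start as a simple inventory. The sketch below records each AI touchpoint with its trigger and data inputs, then lists which transparency elements are still missing; `AITouchpoint` and its fields are hypothetical and should be adapted to your own forms.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AITouchpoint:
    """One place in a form where AI influences what the user sees (hypothetical model)."""

    form: str
    trigger: str
    data_used: List[str]
    has_disclosure: bool = False
    has_explanation: bool = False
    has_human_fallback: bool = False

    def gaps(self) -> List[str]:
        """List the transparency elements this touchpoint still lacks."""
        checks = {
            "disclosure": self.has_disclosure,
            "explanation": self.has_explanation,
            "human fallback": self.has_human_fallback,
        }
        return [name for name, present in checks.items() if not present]


inventory = [
    AITouchpoint("onboarding", "team size entered", ["team_size"], has_disclosure=True),
    AITouchpoint("support intake", "ticket text submitted", ["ticket_text"],
                 has_disclosure=True, has_explanation=True, has_human_fallback=True),
]
for touchpoint in inventory:
    print(touchpoint.form, "->", touchpoint.gaps() or "no gaps")
```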

UX Design: Build flows that clearly label AI suggestions, embed tooltip triggers, and provide control options. Collaborate with legal early to align consent elements and data messaging with the GDPR and the EU AI Act.

Copywriting: Draft succinct, friendly language: “AI-powered by [Tool] to streamline input”, “Confidence: 82% based on your previous answers”, and “Need human insight?” Write fallback flows before coding.

Development & Launch: Implement confidence thresholds, iconography, toggles, and tooltips. Ensure encryption, minimal retention, and data security disclosures are in place.

Testing & Iteration: Run experiments comparing transparent vs non-transparent versions, and monitor trust metrics, completion, opt-outs, and qualitative feedback. Iterate based on where users show confusion or opt-out spikes.

Compliance & Ethical Considerations

For decisions based solely on automated processing that significantly affect users, the GDPR gives individuals the right to receive “meaningful information about the logic involved.”

Although the exact scope of explanation is debated, practical UX compliance requires clear, plain‑language summaries of how AI influences decisions.

Explainable AI (XAI) techniques, such as LIME, SHAP, and counterfactual explanations, can support compliance without revealing underlying code. At the same time, avoid dark patterns like forced consent or manipulative defaults.

Ethical design is not just compliance; it’s a trust lever. Present options clearly, let users manage or withdraw consent easily, and give them editing power over their data.

Finally, opt for balanced transparency: offer just enough context to inform decisions, not full algorithmic transparency, which may confuse users and impair trust.

Future Outlook

As the AI ecosystem matures, transparency tools will evolve. Expect behavioral trust certificates, auto-generated summaries of model rationale, and real-time explainability APIs that tailor explanations per user session.

FactSheets and audit logs embedded in forms may surface provenance details (“This suggestion is based on model v2.1 trained on anonymized usage data”).

Consent negotiation standards, particularly in the EU, are emerging and may become browser-native, enabling users to select levels of AI transparency by default.

Closing the loop, AI systems will adapt based on user feedback, creating dynamic feedback-to-training pipelines that foster continual trust-building.

Conclusion & Key Takeaways

Trust is a continuous endeavor, not a checkbox. By focusing on consistent disclosure, explainable logic, user control, secure handling, and legal alignment, you cultivate forms that users perceive as reliable and transparent. Every layer, from UX labeling to sophisticated XAI tools, contributes.

Use metrics and experiments to iterate, and weave transparency into your product DNA. Now is the time to transform your AI forms into trust engines that drive growth and brand integrity.

How ZinQ’s AI Forms Can Help You Here

If you’re looking to implement transparent, high-converting AI forms without starting from scratch, ZinQ’s AI Form Engine is built with trust and transparency at its core.

Designed specifically for SaaS platforms, ZinQ enables intelligent, explainable, and user-controlled form experiences that drive engagement without sacrificing clarity or compliance.

Here’s how ZinQ helps you build brand trust through better AI form interactions:

- Built-in Explainability: Every AI suggestion is paired with an auto-generated rationale users can understand: no jargon, just clarity.

- Customizable Consent Flows: ZinQ makes it easy to add GDPR/CCPA-aligned consent toggles, disclaimers, and data usage statements right within the form UX.

- Human Escalation Routing: Automatically redirects users to a human agent when AI confidence drops or user trust signals indicate discomfort.

- Privacy-First Architecture: With session-level encryption and minimal data retention by default, ZinQ shows users they’re in control of their data.

- Real-Time Trust Metrics: Monitor transparency-driven metrics like opt-out rate, explanation engagement, and trust scores, all through a visual dashboard.

ZinQ isn’t just a form engine; it’s a trust engine. Whether you’re onboarding new users, qualifying leads, or automating support intake, ZinQ gives you the tools to build transparency into every interaction.